This is Part 2 in a series about neuron-based online learning systems. Part 1 can be found here.

Leveraging Novelty

The ultimate goal of this series is to introduce a new type of neural network that can continuously self-improve using a neuroscience-grounded approach. While this seems a tall order, the problem can be broken down into a system that generates error and then uses that error to reduce future error. In the last post, we introduced NeuroBlocks. NeuroBlocks are combinations of neural circuits, allowing us to define modular units that can be reused across a network. In this post, we are going to extend our NeuroBlocks and add mechanics for integrating network change while actively processing sensory information. We will push forward with a focus on the neocortex and start our discussion with the physical constraints it places on NeuroBlocks.

Structure Of The Network

The neocortex is a wrinkled sheet of tissue wrapping the brain. That sheet has distinctive layers with varying thickness and composition of connectivity. Although this is a bit of a simplification, neurons are individually small and can move within the 3D structure of the brain during development. Columns, however, are large and usually occupy the full thickness of the neocortical sheet.[1]

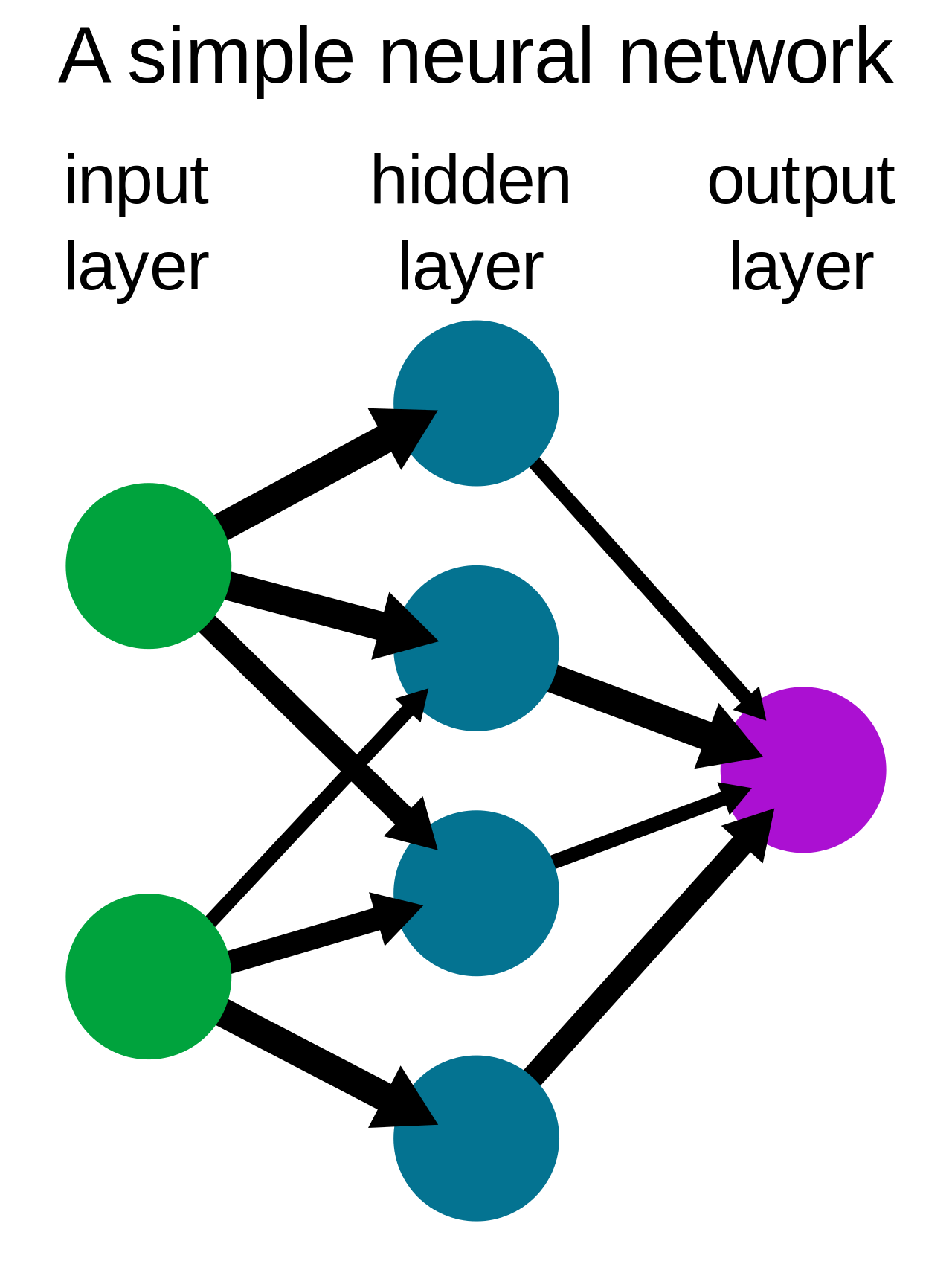

As we build an emulation of the neocortex, we use NeuroBlocks as an approximation of cortical columns. Within the columns, the layers relate to different structural components of the NeuroBlock, such as the loops. As with cortical columns, we need to apply physical constraints on the movement and connectivity of NeuroBlocks. This isn't needless mimicry; it provides vital stability that we will explore in the future. Taking these details together, NeuroBlock connectivity occurs in two dimensions. We can approximate these neural networks with diagrams like those found in mainstream artificial intelligence, which have a similar 2D structure. Figure 2 is such a diagram with inputs and outputs.[2]

In contrast to machine learning networks, the neocortex we are building has no predefined connectivity or layers. As we will show, network structure is grown organically.

This flexibility comes at a price, as connectivity can skip layers or even change the flow back towards the inputs. The connectivity complexity is limited such that NeuroBlocks tend to connect to nearby NeuroBlocks. NeuroBlocks tend to “wire together when they fire together”, but also when they are physically proximate. To better understand this, let us consider what these NeuroBlocks represent.
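The two conditions for wiring described here, firing together and physical proximity, can be sketched as a simple eligibility check. This is only an illustration: the `Block` class, the `may_connect` function, the 2D coordinates, and the `max_distance` threshold are hypothetical names and values, not part of the NeuroBlock design from this series.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Block:
    """A NeuroBlock placed at a point on the 2D sheet (coordinates are assumed)."""
    name: str
    x: float
    y: float


def may_connect(a: Block, b: Block, fired_together: bool,
                max_distance: float = 1.5) -> bool:
    """Blocks are wiring candidates only if they co-fired AND sit close together.

    max_distance is an arbitrary illustrative threshold.
    """
    distance = hypot(a.x - b.x, a.y - b.y)
    return fired_together and distance <= max_distance


left = Block("left", 0.0, 0.0)
right = Block("right", 1.0, 0.0)
distant = Block("distant", 5.0, 0.0)

near_and_cofired = may_connect(left, right, fired_together=True)
far_but_cofired = may_connect(left, distant, fired_together=True)
near_not_cofired = may_connect(left, right, fired_together=False)
```

Under these assumptions only the first pair qualifies: co-firing alone is not enough when the blocks are far apart, and proximity alone is not enough without co-firing.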

Patterns in the network

The primary function of a NeuroBlock is to detect certain patterns and produce stimulations to different NeuroBlocks depending on the pattern. Let us forget individual neurons and imagine a network composed entirely of NeuroBlocks. In this network, each block captures a different pattern. When we say pattern, we mean some collection of neural activity that could be the word "one", the word's sound, an image of the numerical symbol, or all of the above.

Another way of thinking about patterns in the network is as abstractions. Each NeuroBlock represents a different abstraction that can be leveraged or reused by other NeuroBlocks. While thinking about abstractions is fairly meta, this framing starts to capture the reuse and hierarchy of patterns in the network. The activity of a given NeuroBlock represents the presence of that abstraction. In this way, abstractions can be anything from partial words to the integration of smells and sights. Returning to the basics: since NeuroBlocks connect in a two-dimensional structure to physically proximal NeuroBlocks, the patterns that are captured trend towards greater abstraction as the network grows away from the inputs.

If this network were manually architected, it might be tempting to force the network to capture "word" without so many intermediate NeuroBlocks. The intermediate blocks might seem inefficient, but removing them would be missing the forest for the trees. These smaller patterns are powerful due to their reusability. The patterns in Figure 4 could be reused in different words, such as "work" or "board". To help us describe these relationships, we will call "word" a superpattern of "wor" and "rd", and conversely, "wor" and "rd" are subpatterns of "word".
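The superpattern and subpattern relationships can be sketched as a pair of lookup tables. This is a minimal illustration, not the series' implementation: `add_pattern` and both dictionaries are hypothetical names, and the way "work" and "board" are split into parts here is an assumption made only to show reuse.

```python
from collections import defaultdict

# superpattern name -> its subpatterns, in order
subpatterns_of = {}
# subpattern name -> every superpattern that reuses it
superpatterns_of = defaultdict(set)


def add_pattern(name, parts):
    """Register a pattern and link it to each of its subpatterns."""
    subpatterns_of[name] = tuple(parts)
    for part in parts:
        superpatterns_of[part].add(name)


add_pattern("word", ["wor", "rd"])
# The decompositions below are assumed for illustration only.
add_pattern("work", ["wor", "k"])
add_pattern("board", ["boa", "rd"])

reuse_of_wor = sorted(superpatterns_of["wor"])  # patterns that reuse "wor"
reuse_of_rd = sorted(superpatterns_of["rd"])    # patterns that reuse "rd"
```

Each small pattern is stored once and shared by every word that contains it, which is the reuse that a single monolithic "word" block would give up.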

In Figure 4 activity flows from the left to the right, culminating in the activation of word. By thinking about Figure 4 as a small part of a larger network, the resulting activity in Figure 4 can then be leveraged in other patterns, such as in sentences.

Let us look at another example where we do not yet have a network. Like our previous error that detects when a failed pattern exists, what if we could determine when a NeuroBlock activates without a superpattern? To state that another way, can we produce error when a NeuroBlock is not further abstracted? A simple way to accomplish this is by providing feedback from higher level patterns.

Figure 5 depicts how the pattern "cat" might be captured. If higher level patterns signal to lower level patterns, we can add NeuroBlocks capturing patterns of physically proximate errors until the error converges to a single NeuroBlock, in this case cat.

Figure 5 shows growth over time if c, a, and t are continuously activating. These activations lead to our new error without backwards feedback. The first NeuroBlock to be added is ca, which forms and provides feedback, shutting down the error in c and a. Next, ca and t are in error because they are not yet abstracted into a new pattern. This leads to the formation of cat that in turn provides feedback to its subpatterns, leaving cat as the only NeuroBlock in error. Let us call this new error abstraction error. When a NeuroBlock doesn’t receive superpattern feedback, it will produce abstraction error. To understand this better, let’s examine a circuit that makes this new type of error possible.
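The growth sequence just described can be traced with a small simulation. This is a sketch under simplifying assumptions: the blocks are kept in a list ordered by position, a block is "in error" exactly when it has no superpattern, and each step merges the two leftmost erroring neighbours. The `grow` function and the leftmost-pair rule are hypothetical; the text only requires that the errors be physically proximate.

```python
def grow(inputs):
    """Return the set of blocks in abstraction error after each growth step."""
    in_error = list(inputs)        # blocks with no superpattern, in sheet order
    history = [list(in_error)]
    while len(in_error) > 1:
        # A new block captures two adjacent erroring blocks and feeds back to
        # them, so they stop producing error; the new block has no
        # superpattern yet and is now in error itself.
        new_block = in_error[0] + in_error[1]
        in_error = [new_block] + in_error[2:]
        history.append(list(in_error))
    return history


steps = grow(["c", "a", "t"])
for step in steps:
    print(step)
```

The run ends with a single erroring block, matching the convergence shown in Figure 5.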

Hierarchical Pattern Recognizers

In order to generate this new abstraction error and facilitate the cross hierarchy communication that is necessary to process this error, we need to build on the Pattern Recognizer from our last post.

To best understand this, we will focus on the lower half of Figure 6. This portion of the subnetwork is only relevant when the pattern is recognized, that is, when A and B fire together and cause Out to activate. Out activating starts a new neuron loop, shown as L4->L5->L6. This additional loop runs to completion and signals error unless a superpattern interrupts it.
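The behaviour of this loop can be sketched as a small timing function. The stage names come from the text, but everything else is assumed for illustration: one stage per tick, a single feedback arrival time, and the names `LOOP_STAGES` and `abstraction_error` are hypothetical rather than a description of the actual circuit.

```python
LOOP_STAGES = ("L4", "L5", "L6")


def abstraction_error(out_fired, feedback_at_tick=None):
    """Return True if the loop completes and signals abstraction error.

    feedback_at_tick is the 0-based tick at which superpattern feedback
    arrives, or None if no superpattern responds.
    """
    if not out_fired:
        return False  # the pattern was not recognized, so the loop never starts
    for tick in range(len(LOOP_STAGES)):
        if feedback_at_tick is not None and feedback_at_tick <= tick:
            return False  # a superpattern interrupted the loop in time
    return True  # the loop ran through L6 uninterrupted


no_superpattern = abstraction_error(True)
interrupted = abstraction_error(True, feedback_at_tick=1)
too_late = abstraction_error(True, feedback_at_tick=5)
not_recognized = abstraction_error(False)
```

In this sketch, error is produced only when the pattern is recognized and no feedback arrives before the loop finishes; feedback that arrives after the last stage does not suppress the error.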

We should be clear that these error signals are parallel pathways to stimulation. These pathways aren't typically used for the purpose of pattern recognition. You might think that these connections are on a separate layer of the cortical sheet from the pattern recognition layer.

Abstraction error sent to subpatterns does not affect the future activation of subpattern NeuroBlocks, only their production of abstraction error. This separation is key to taking advantage of the modularity of NeuroBlocks. Next, let us connect this new type of error into a process that captures patterns.

Leveraging Error

In the previous post, we described a naive way to grow a network, by randomly creating patterns. With this new type of error, we can take a more directed approach. By creating a network of Hierarchical Pattern Recognizer NeuroBlocks, every element of the network can generate this error. Extension of the network can be provided by a simple feedback loop that samples abstraction error to select NeuroBlocks (that have produced abstraction error) as sources of new patterns. By filtering abstraction error that is both physically and temporally proximate, we can create stable, hierarchical networks. The creation of new NeuroBlocks reduces future error in the network by capturing abstraction error patterns.
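The sampling and filtering step can be sketched as follows. This is a minimal illustration under assumed details: `ErrorEvent`, `proximate`, `propose_pattern`, and the distance and delay thresholds are all hypothetical, and sampling a single seed event uniformly at random is one possible choice among many.

```python
import random
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class ErrorEvent:
    """One abstraction error: which block produced it, where, and when."""
    block: str
    x: float
    y: float
    time: float


def proximate(a, b, max_distance=1.5, max_delay=1.0):
    """True if two errors from different blocks are close in space and time."""
    return (a.block != b.block
            and hypot(a.x - b.x, a.y - b.y) <= max_distance
            and abs(a.time - b.time) <= max_delay)


def propose_pattern(events, rng):
    """Sample one error event and gather the errors proximate to it.

    Returns the source blocks for a new NeuroBlock, or None if the sampled
    error has no proximate partner.
    """
    seed = rng.choice(events)
    group = [seed] + [e for e in events if proximate(seed, e)]
    if len(group) < 2:
        return None
    return tuple(sorted(e.block for e in group))


rng = random.Random(0)
c = ErrorEvent("c", 0.0, 0.0, time=0.0)
a = ErrorEvent("a", 1.0, 0.0, time=0.1)
isolated = ErrorEvent("z", 9.0, 0.0, time=0.2)

new_pattern = propose_pattern([c, a], rng)      # c and a are close in space and time
no_pattern = propose_pattern([isolated], rng)   # nothing nearby to combine with
```

Creating a NeuroBlock from the returned sources would then silence their abstraction error through feedback, which is how each new block reduces future error.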

If we think about the network growing over time, it is capturing the experience of past patterns in the network. A profound way to think about abstraction error is as novelty. The network grows as the direct result of novelty, capturing new patterns to reduce future novelty. Thinking about abstraction error as novelty opens additional uses for this error to drive the network.

Putting Elements Together

We have now described the elements of a neocortex emulation that can autonomously construct a hierarchical network capable of detecting patterns that fail and patterns that are novel. By leveraging these detections as an error signal to change the network, adding and removing NeuroBlocks, we can create a feedback system that reduces error in the network over time. Pattern recognition is far from the only computation occurring in our brain, but it is a powerful foundation for additional computation. In the next post, we are going to delve into the details of the concepts introduced here. While there is far more detail to be explored in implementation, the simplicity of this approach conveys its power.

[1] Mountcastle, Vernon B. "Modality and topographic properties of single neurons of cat's somatic sensory cortex." Journal of neurophysiology 20.4 (1957): 408-434.

[2] By User:Wiso - from en:Image:Neural network example.svg, vetorialization of en:Image:Neural network example.png, Public Domain, https://commons.wikimedia.org/w/index.php?curid=5084582